Recently, I was discussing the performance of matrix multiplication code with a former colleague. The code in question was taking rather a long time to multiply 2 reasonably sized matrices (1100×2300 and 2300×1100) together whereas an external maths library was somewhat quicker (think orders of magnitude) it led to wondering where the performance differences came from.

Quick Refresher

The multiplication of two matrices, m1 and m2, (Wikipedia) involves multiplying large numbers of numbers together. Each cell in the output matrix is the result of the multiplication of a row from m1 against a column from m2.

This multiplication work is inherently embarrassingly parallel (Wikipedia). However, for the purposes of this post, single threaded performance is being focused on.

Suggested Approaches

In order to generate the output matrix, multiple approaches can be used, including:

- Generating each cell in the output matrix sequentially, i.e. multiplying a single row from m1 against a single column from m2, storing the result in the output and then moving to the next cell

- Process each row from m1, subsequently looping through all columns in m2 for the appropriate row. The output matrix only containing valid entries on the final loop through

Each of these algorithms can also be made to work with SIMD intrinsics (Wikipedia).

Note that all the source code for these can be found at the GitHub link below.

Performance Figures

All timings were generated using an Intel i7-8700k @ 3.7GHz with DDR4 RAM @ 2400MHz. The input matrices were 1100×2300 and 2300×1100. This leads to ~2.78 billion multiplications (and associated additions) to be performed.

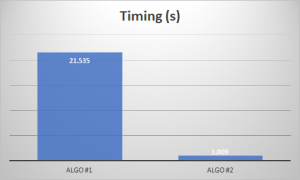

The timings for the algorithms were:

- Algo #1 – 21.535s (0.13GFLOP/s)

- Algo #2 – 1.009s (2.76GFLOP/s)

As we can see, the choice of algorithm makes a huge difference to the performance achieved. The scale factors at play here depend heavily upon the size of the input matrices. My assumption is that this is down to cache locality, but further analysis would be needed.

Looking at a SIMD (using AVX – 256bits) implementations, the following timings were observed:

- Algo #1 – 2.745s (1.01GFLOP/s)

- Algo #2 – 0.396s (7.01GFLOP/s)

Note that the SIMD implementations didn’t take advantage of any FMA instructions (Wikipedia). This is to ensure that the results were the same between architectures (avoiding rounding issues).

Conclusion

Many conclusions can be drawn from this data, including:

- Using SIMD (algo #1) gives us an 8 times speed up (in this example) and therefore it’s worth it

- Using a better algorithm and simple operators can give us even greater speed ups – ~20x

- The combination of both good algorithms and the features available in the latest processors give us the best overall speed up – ~54x

It’s clear from the numbers that what matters most is choosing the appropriate algorithm and not the latest instruction set of choice.

Where used in conjunction with the appropriate algorithms SIMD instructions can further add performance, but as we’ve seen, their use alone is not a silver bullet especially.

Further Work

I’ve only run the code with floats and against 256bit wide SIMD instructions. A further piece of work to show the performance difference between using doubles instead of floats as well as the use of the later AVX512 instruction set would be interesting to see how performance could subsequently be improved.

Additionally, the use of CUDA (given the embarrassingly parallel nature of the problem) and the availability of graphics cards with high numbers of cores would lead to rather interesting performance (hopefully). Obviously the caveats about adding SIMD instructions to your code base would apply at an even greater level if talking about using GPUs rather than CPUs.

This work has also assumed that the matrices to be operated upon are fully populated. If this was not to be the case, then one could investigate the impact of using a sparse matrix representation to reduce the total number of calculations to be performed.

Links